K.A.R.L

Header by Justin Kunimune - Own work, CC BY-SA 4.0

K.A.R.L is a web app that helps to divide 360-degree panoramas into sections (sky, ground, trees, etc.) and then determine the proportion of each section in the overall image.

Introduction

The project emerged from a collaboration with two friends. One of them is in the conceptual phase of his bachelor’s thesis (Geography), which deals with the impact of vegetation on urban climate. To this end, he conducted albedo measurements at various locations in Cologne, essentially measuring “how much light comes from the sky, and how much of it is reflected by the ground.” To put these measurements into context, he took a 360-degree panorama at each measurement location, which looks something like this:

He then needed data on what percentage of the view was occupied by vegetation, sky, and ground. To measure this, he manually created a segmentation map in GIMP, which looks something like this:

Problem Statement

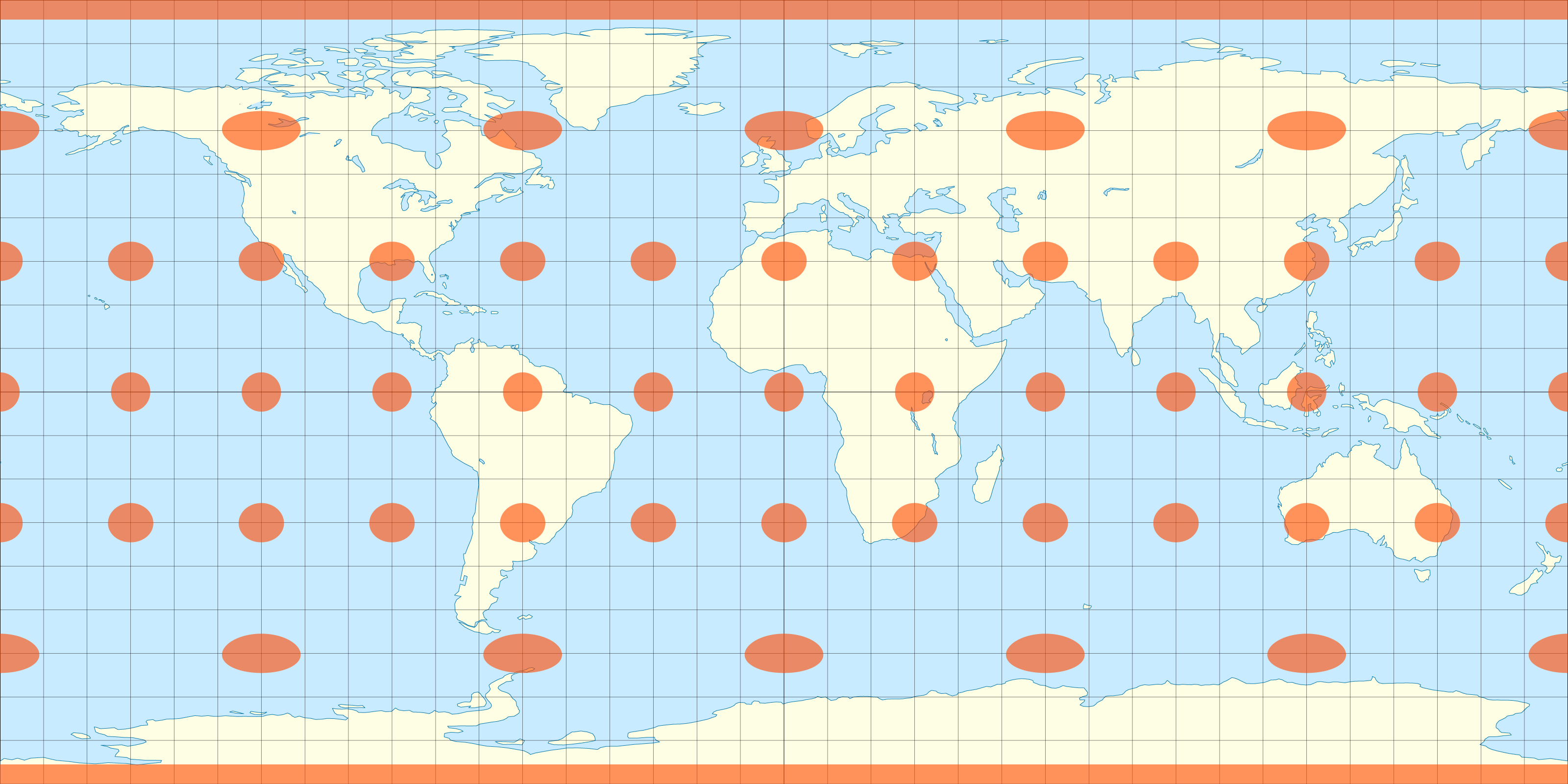

If we naively count the pixels of each color and use that to calculate a percentage distribution, we encounter the classic distortion problem that humanity has faced for centuries with maps. Spheres do not like being represented in two dimensions, which always leads to distortions, as visualized in the following image.

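Fortunately, this distortion only occurs horizontally: rows near the top and bottom of the image are stretched, while rows near the horizon are not. So we need a formula that assigns each pixel a weight based on its vertical position to compensate for the stretching. After many attempts, we arrived at this formula: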

    /*
      height: height of the image in pixels
      calibrationFactor: 1.333, somehow this works, don't ask why, we don't either
      y: y position of the pixel
    */
    const pixelValue = Math.cos(
      (((360 / height ** 2) * y ** 2 + (-360 / height) * y + 90) / 360) *
        (2 + calibrationFactor) *
        Math.PI
    );

This formula is actually designed to calculate the distance between two longitudes for a given latitude, but it works very well for our purposes.

Here are some of the first attempts in Desmos (fantastic tool, by the way):
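For comparison, the textbook area weight for a row of an equirectangular panorama is the cosine of that row's latitude. The sketch below uses that weight instead of the calibrated formula above, and it assumes the image covers the full 180 degrees from top to bottom. `rowWeight`, `weightedShares`, and the grid-of-labels input are hypothetical names chosen for illustration; this is not necessarily how K.A.R.L computes its result.

```javascript
// Weight of pixel row y, assuming an equirectangular image whose rows span
// latitudes from +90 degrees (top) to -90 degrees (bottom).
// Assumption: plain cos(latitude), not the calibrated formula from the article.
function rowWeight(y, height) {
  const latitude = (0.5 - (y + 0.5) / height) * Math.PI; // radians, row centre
  return Math.cos(latitude);
}

// rows: array of pixel rows, each an array of section labels (hypothetical format).
// Returns an object mapping each label to its weighted share of the view.
function weightedShares(rows) {
  const totals = new Map();
  let sum = 0;
  rows.forEach((row, y) => {
    const weight = rowWeight(y, rows.length);
    for (const label of row) {
      totals.set(label, (totals.get(label) ?? 0) + weight);
      sum += weight;
    }
  });
  const shares = {};
  for (const [label, total] of totals) {
    shares[label] = total / sum;
  }
  return shares;
}
```

With this weighting, a band of sky along the top edge counts for less than the same number of pixels at the horizon, which is the correction naive pixel counting is missing.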

Technologies

I wanted to build a web app that theoretically works completely offline. Therefore, after the initial page load, no further data is sent; everything runs in the browser. This is challenging for an application that processes many pixels, because the work is computationally demanding and browser resources are limited. The following technologies helped to keep the user experience reasonably smooth:

Canvas

When working with pixels in the browser, you can’t really avoid Canvas2D. Its programmatic interface makes it ideal for this job.
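As a rough illustration of what working with Canvas pixels looks like, the sketch below counts how often each color occurs in an RGBA buffer laid out like `ImageData.data` (four bytes per pixel). The function name `countColors` is hypothetical and the code is not taken from K.A.R.L.

```javascript
// Counts pixels per color in an RGBA byte buffer (4 bytes per pixel, as in
// ImageData.data). Alpha is ignored. Returns a Map from "rrggbb" to a count.
function countColors(data) {
  const counts = new Map();
  for (let i = 0; i < data.length; i += 4) {
    const hex = [data[i], data[i + 1], data[i + 2]]
      .map((value) => value.toString(16).padStart(2, "0"))
      .join("");
    counts.set(hex, (counts.get(hex) ?? 0) + 1);
  }
  return counts;
}

// In the browser, the buffer would typically come from a 2D context:
//   const ctx = canvas.getContext("2d");
//   const { data } = ctx.getImageData(0, 0, canvas.width, canvas.height);
//   const counts = countColors(data);
```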

Web Workers

Normally, all operations of a website run in a single thread in the browser. This means that a computationally intensive task can bring the entire site to a halt. Web Workers provide a solution by allowing arbitrary code to run in a separate thread, though communication with the main thread can be tricky.

K.A.R.L uses two Web Workers: the pixel-worker handles segmentation map analysis and the fill tool, while the ai-worker executes TensorFlow code.
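One way to keep the communication manageable is to give every message a task type and an id, so the main thread can match replies to requests. The sketch below shows such a dispatcher for the worker side. The message shape `{ id, type, payload }` and the `countOpaque` task are assumptions made up for this example, not K.A.R.L's actual protocol.

```javascript
// Hypothetical task table: each task receives the message payload and returns a result.
const tasks = new Map([
  // Placeholder task: counts fully opaque pixels in an RGBA buffer.
  ["countOpaque", ({ data }) => {
    let opaque = 0;
    for (let i = 3; i < data.length; i += 4) {
      if (data[i] === 255) opaque++;
    }
    return opaque;
  }],
]);

// Turns one incoming message into one reply carrying the same id.
function handleMessage(message) {
  const task = tasks.get(message.type);
  if (!task) {
    return { id: message.id, error: `unknown task: ${message.type}` };
  }
  return { id: message.id, result: task(message.payload) };
}

// Wire the dispatcher up only when this file actually runs inside a worker.
if (typeof WorkerGlobalScope !== "undefined" && self instanceof WorkerGlobalScope) {
  self.onmessage = (event) => self.postMessage(handleMessage(event.data));
}

// Main-thread side, for orientation:
//   const worker = new Worker("pixel-worker.js");
//   worker.onmessage = (event) => console.log(event.data);
//   worker.postMessage({ id: 1, type: "countOpaque", payload: { data } });
```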

TensorFlow

Why manually color segments when the computer can do it automatically? That was my thought, so I integrated a TensorFlow network trained on the ADE20K dataset. This network is excellent at recognizing trees, ground, concrete, and sky. In the editor view, this feature sits behind the “AI” button on the right.

IndexedDB

When it comes to storing large amounts of data (especially images) locally in the browser, IndexedDB is the best option (yes, localStorage with Base64 images could work too, but it’s limited to about 5 MB in some browsers). However, the IndexedDB API is one of the most confusing browser APIs out there, so I use idb, a fantastic small wrapper library that exposes IndexedDB through Promises.

Interesting Features…

Flood Fill Algorithm

After manually segmenting several panoramas using the tools, I noticed that I often just painted regions with similar colors. This gave me the idea to build a fill tool similar to Photoshop, but much better.

The tool works roughly as follows:

- The user clicks on the image with the fill tool.

- The x/y coordinates of the click and all pixels of the image are sent to a Web Worker.

- The Web Worker creates a grayscale image where each pixel value represents the spatial and color distance to the clicked region.

- The image is sent back.

- The canvas code then determines whether individual pixels should be filled based on their values.
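The two central steps can be sketched as a per-pixel distance map followed by a threshold. This is a simplified stand-in under stated assumptions: the distance is the Euclidean RGB distance to the clicked pixel plus a weighted Euclidean pixel distance, and the names `distanceMap`, `fillMask`, and `spatialWeight` are hypothetical. The real tool may combine the two distances differently.

```javascript
// Builds a grayscale map (one byte per pixel) from an RGBA buffer.
// Each value is the color distance to the clicked pixel plus a weighted
// spatial distance. Uint8ClampedArray rounds and clamps values to 0..255.
function distanceMap(data, width, height, clickX, clickY, spatialWeight = 0.1) {
  const map = new Uint8ClampedArray(width * height);
  const c = (clickY * width + clickX) * 4;
  const r0 = data[c];
  const g0 = data[c + 1];
  const b0 = data[c + 2];
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4;
      const colorDistance = Math.hypot(data[i] - r0, data[i + 1] - g0, data[i + 2] - b0);
      const spatialDistance = Math.hypot(x - clickX, y - clickY);
      map[y * width + x] = colorDistance + spatialWeight * spatialDistance;
    }
  }
  return map;
}

// Decides per pixel whether to fill: true where the map value is within tolerance.
function fillMask(map, tolerance) {
  return Array.from(map, (value) => value <= tolerance);
}
```

Because the worker returns the whole map rather than a finished mask, the canvas side can re-apply a different tolerance without recomputing any distances.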

Svelte Bindings

I always found it complicated to manage state across multiple components. A good example is the editor, which consists of three separate components (Toolbar, Topbar, PaintArea), all of which need to know which color and tool are currently active. One could store this state globally and let each component access it, but in reality, the active tool is only relevant in the editor context. So now, this state resides in the editor component and is passed down to its subcomponents via binding. It looks something like this:

    <!-- Editor.svelte -->
    <script lang="ts">
      import TopBar from "./TopBar.svelte";

      // Default color, overridden by the last color saved in localStorage.
      let activeColor = "ff0000";
      let _activeColor = localStorage.getItem("activeColor");
      if (_activeColor) {
        activeColor = _activeColor;
      }
    </script>

    <!-- bind: keeps the parent's and the child's activeColor in sync -->
    <TopBar bind:activeColor/>

    <!-- TopBar.svelte -->
    <script>
      export let activeColor = "ff0000";
    </script>

    {activeColor}

Svelte Stores

For some other cases, stores work really well, such as for toasts/modals. This allows the toast state (the small messages at the bottom left) to be managed in a single component without having to distribute it across multiple components.
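To show the idea without any dependencies, the sketch below hand-rolls a tiny store that follows Svelte's store contract: `subscribe` calls the callback immediately with the current value and returns an unsubscribe function. In a real Svelte app, `writable` from `svelte/store` would normally provide this. The names `createToastStore`, `push`, and `dismiss` are hypothetical and not taken from K.A.R.L.

```javascript
// Minimal toast store following the Svelte store contract.
function createToastStore() {
  let toasts = [];
  let nextId = 0;
  const subscribers = new Set();
  const notify = () => subscribers.forEach((callback) => callback(toasts));

  return {
    // Calls the callback right away, then again on every change.
    subscribe(callback) {
      subscribers.add(callback);
      callback(toasts);
      return () => subscribers.delete(callback);
    },
    // Adds a toast and returns its id so it can be dismissed later.
    push(message) {
      const id = nextId++;
      toasts = [...toasts, { id, message }];
      notify();
      return id;
    },
    // Removes the toast with the given id.
    dismiss(id) {
      toasts = toasts.filter((toast) => toast.id !== id);
      notify();
    },
  };
}
```

Any component can then call `push` to show a message, while a single toast component subscribes (in Svelte, via the `$` prefix) and renders the list.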